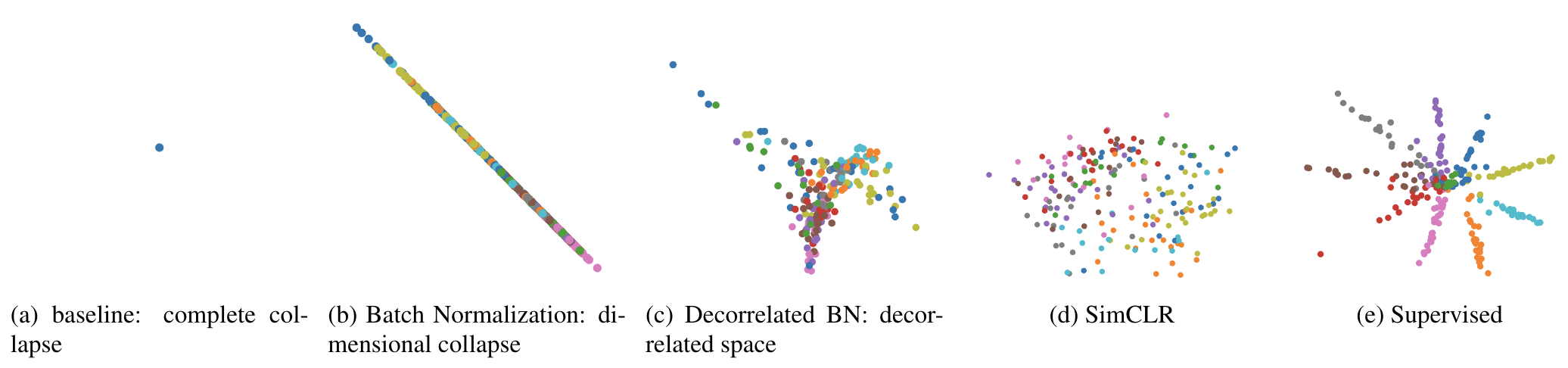

Figure 1: An overview of the key components of this work: (a) is a sketch of the framework used in this work; (b) and (c) are two reachable collapse patterns in self-supervised settings; (d) is an illustration of the goal of feature decorrelation.

Abstract

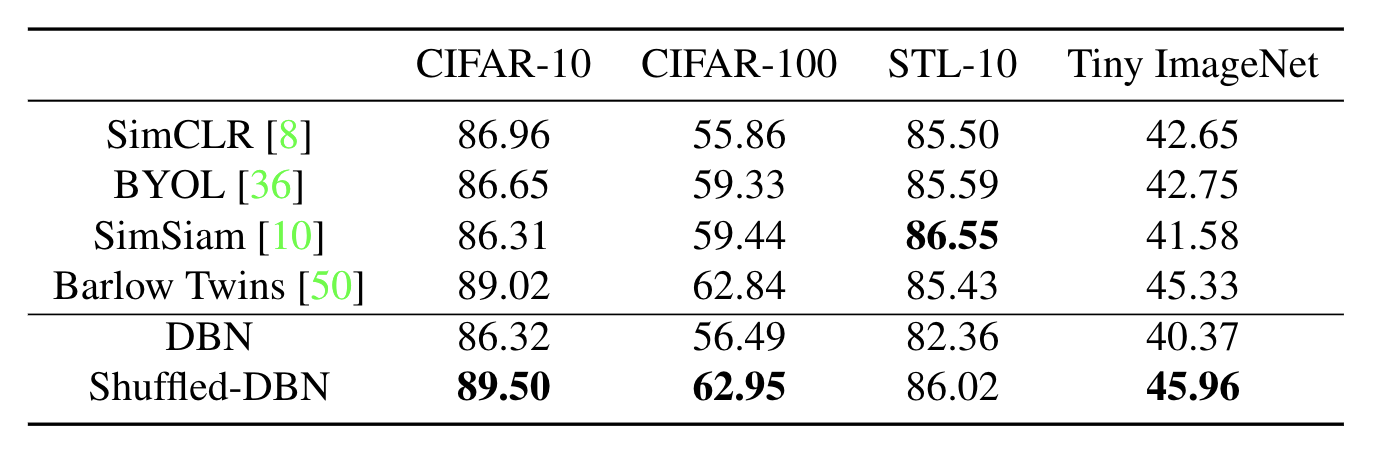

In self-supervised representation learning, a common idea behind most state-of-the-art approaches is to enforce the robustness of the representations to predefined augmentations. A potential issue with this idea is the existence of completely collapsed solutions (i.e., constant features), which are typically avoided implicitly by carefully chosen implementation details. In this work, we study a relatively concise framework containing the most common components from recent approaches. We verify the existence of complete collapse and discover another reachable collapse pattern that is usually overlooked, namely dimensional collapse. We connect dimensional collapse with strong correlations between axes and consider this connection a strong motivation for feature decorrelation (i.e., standardizing the covariance matrix). The capability of correlation as an unsupervised metric and the gains from feature decorrelation are verified empirically to highlight the importance and potential of this insight.
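To make the link between dimensional collapse and strong correlations between axes concrete, the following is a minimal sketch and not the metric or implementation used in this work. It assumes features arrive as an `(N, d)` NumPy array and uses the mean absolute off-diagonal entry of the correlation matrix as one possible unsupervised indicator. The function name `correlation_strength` and the synthetic data are hypothetical and chosen only for illustration.

```python
import numpy as np

def correlation_strength(features, eps=1e-8):
    """Mean absolute off-diagonal entry of the feature correlation matrix.

    Illustrative assumption: this is one simple way to summarize how strongly
    the feature axes are correlated; it is not taken from the paper.
    """
    # Standardize every axis to zero mean and (approximately) unit variance.
    z = features - features.mean(axis=0, keepdims=True)
    z = z / (z.std(axis=0, keepdims=True) + eps)
    # Correlation matrix of shape (d, d).
    corr = (z.T @ z) / features.shape[0]
    # Keep only the off-diagonal entries, i.e. correlations between distinct axes.
    d = corr.shape[0]
    off_diag = corr[~np.eye(d, dtype=bool)]
    return float(np.abs(off_diag).mean())

rng = np.random.default_rng(0)

# Features whose axes are independent: off-diagonal correlations are near zero.
healthy = rng.normal(size=(2048, 8))

# Synthetic dimensional collapse: all 8 axes are rescalings of one latent direction,
# so every pair of axes has correlation close to +1 or -1.
latent = rng.normal(size=(2048, 1))
collapsed = latent @ rng.normal(size=(1, 8))

healthy_score = correlation_strength(healthy)
collapsed_score = correlation_strength(collapsed)
print(f"healthy: {healthy_score:.3f}, collapsed: {collapsed_score:.3f}")
```

In this toy setting the score stays near zero for independent axes and approaches one when the features occupy a single direction. Completely collapsed (constant) features have zero variance on every axis, so this sketch does not score them meaningfully; that case would need a separate variance check.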