| ICRA 2022 (long version) | CVPR 2021 Workshop (best paper nominee) | Code |


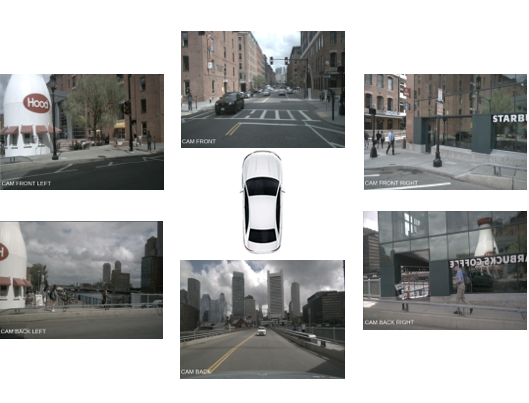

The vectorized HD map predicted by HDMapNet. Red lines: lane boundaries; white lines: lane dividers; yellow lines: pedestrian crossings. LiDAR point clouds are overlaid on the map for visualization purposes only.


Long-term temporal accumulation: feature maps of previous frames are pasted into the current frame according to the ego poses, fused by max pooling, and then fed into the decoder.
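The accumulation step can be sketched as follows. This is a minimal illustration under stated assumptions, not the HDMapNet implementation: the planar `se2` pose helper, the ego-centered grid convention (columns along +x, rows along +y, `res` meters per cell), nearest-neighbor resampling, and the function names `warp_to_current` and `fuse_frames` are all hypothetical choices made for this example.

```python
import numpy as np


def se2(x, y, yaw):
    """3x3 homogeneous transform for a planar ego pose (meters, radians)."""
    c, s = np.cos(yaw), np.sin(yaw)
    return np.array([[c, -s, x], [s, c, y], [0.0, 0.0, 1.0]])


def warp_to_current(feat, pose_prev, pose_cur, res):
    """Paste a previous BEV feature map (C, H, W) into the current ego frame.

    Assumed grid convention (hypothetical): the ego vehicle sits at the grid
    center, columns follow +x, rows follow +y, each cell is `res` meters wide.
    Cells the previous frame never observed are filled with -inf.
    """
    C, H, W = feat.shape
    ii, jj = np.meshgrid(np.arange(H), np.arange(W), indexing="ij")
    # Metric coordinates of every current-frame cell center.
    x = (jj - (W - 1) / 2) * res
    y = (ii - (H - 1) / 2) * res
    pts = np.stack([x.ravel(), y.ravel(), np.ones(H * W)])
    # current ego frame -> world -> previous ego frame
    prev = np.linalg.inv(pose_prev) @ pose_cur @ pts
    # Nearest-neighbor lookup in the previous grid.
    jp = np.rint(prev[0] / res + (W - 1) / 2).astype(int)
    ip = np.rint(prev[1] / res + (H - 1) / 2).astype(int)
    valid = (ip >= 0) & (ip < H) & (jp >= 0) & (jp < W)
    out = np.full((C, H * W), -np.inf)
    out[:, valid] = feat[:, ip[valid], jp[valid]]
    return out.reshape(C, H, W)


def fuse_frames(feats, poses, res):
    """Warp all frames into the latest frame and fuse them by element-wise max."""
    warped = [warp_to_current(f, p, poses[-1], res) for f, p in zip(feats, poses)]
    return np.max(np.stack(warped), axis=0)
```

Filling unobserved cells with `-inf` means the max is taken only over frames that actually saw a cell; the current frame covers the whole grid, so the fused map stays finite.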

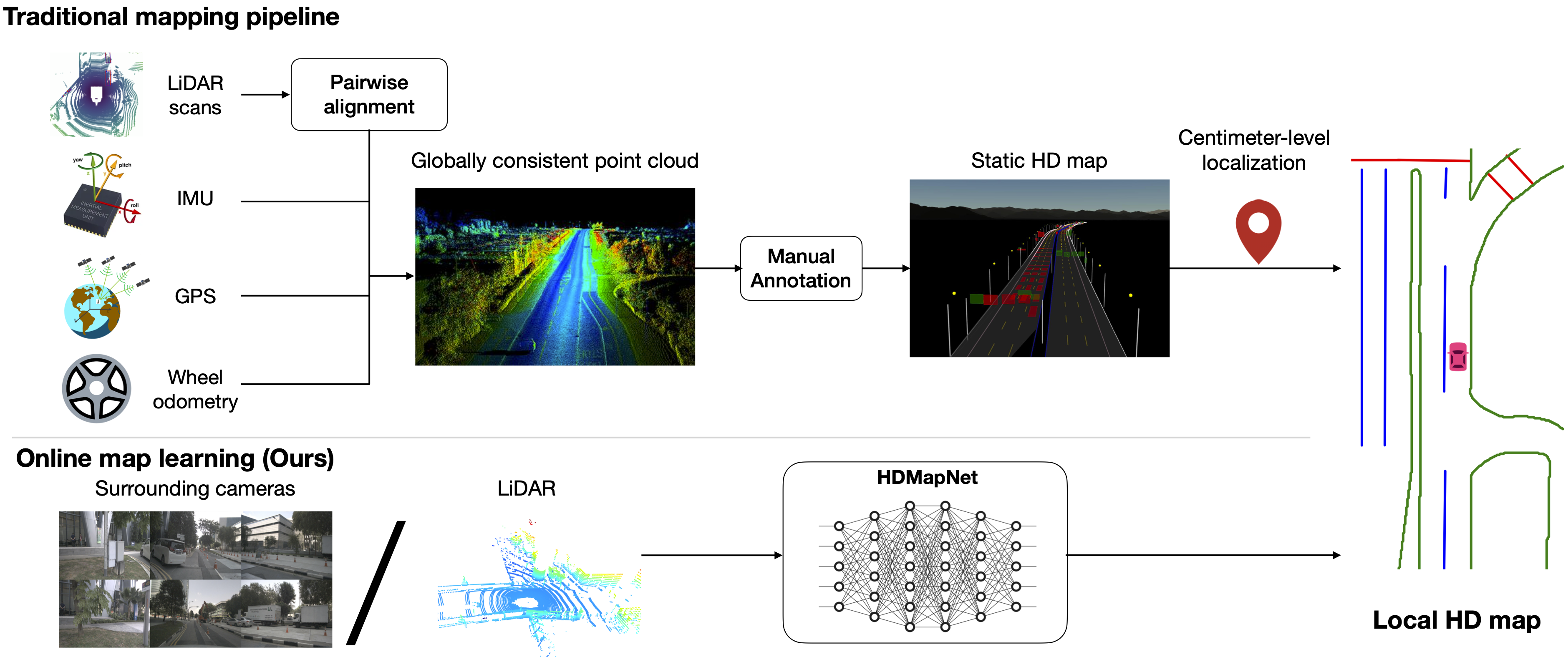

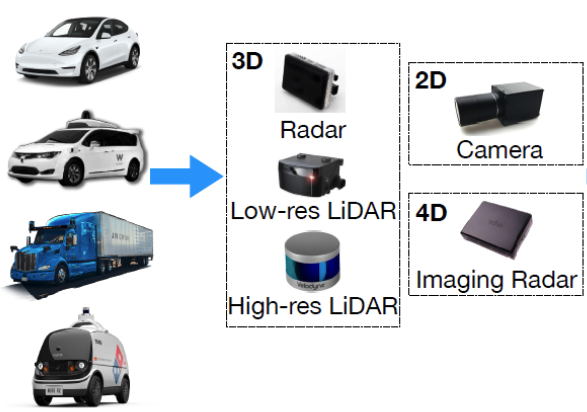

Main idea. High-definition map (HD map) construction is a crucial problem for autonomous driving. It typically involves collecting high-quality point clouds, fusing multiple point clouds of the same scene, annotating map elements, and constantly updating the maps. This pipeline, however, requires a vast amount of human effort and resources, which limits its scalability. In this paper, we argue that online map learning, which dynamically constructs HD maps from local sensor observations, is a more scalable way than traditional pre-annotated HD maps to provide self-driving vehicles with semantic and geometric priors. We introduce a strong online map learning method, titled HDMapNet.

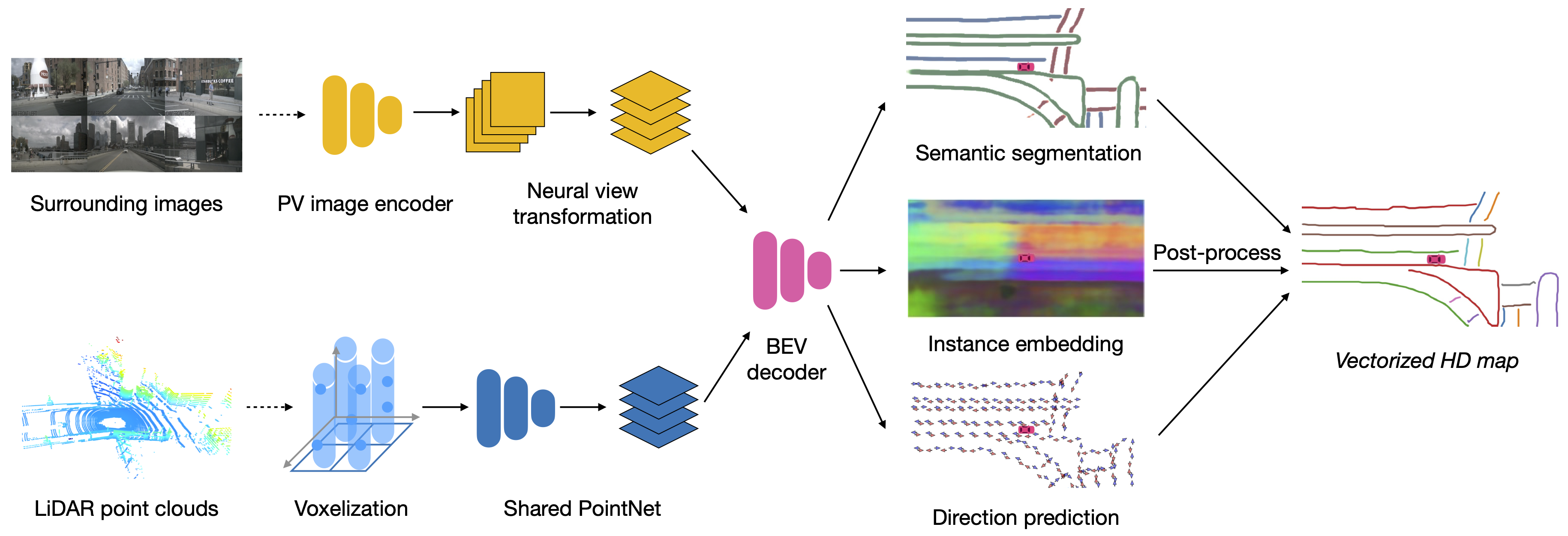

Overview of HDMapNet. HDMapNet consists of four modules: a perspective-view image encoder, a neural view transformer in the image branch, a pillar-based point cloud encoder, and a map element decoder. The map decoder has three output branches (semantic segmentation, instance detection, and direction classification), whose outputs are post-processed into a vectorized HD map.
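The core scatter step of a pillar-based point cloud encoder can be sketched like this. It is a simplified, hypothetical stand-in: a single fixed linear layer plus ReLU replaces the learned per-point network, and the function name, grid ranges, and resolution are illustrative assumptions rather than HDMapNet's actual settings. Points are binned into vertical columns (pillars) on the ground plane, and the features of all points in a pillar are max-pooled into one BEV cell.

```python
import numpy as np


def pillar_encode(points, weight, x_range, y_range, res):
    """Scatter LiDAR points into a BEV pseudo-image of shape (C, H, W).

    points: (N, D) array whose first two columns are x, y in meters.
    weight: (D, C) matrix standing in for a learned per-point network
            (hypothetical simplification).
    """
    H = int(round((y_range[1] - y_range[0]) / res))
    W = int(round((x_range[1] - x_range[0]) / res))
    # Pillar (column) index of every point on the ground-plane grid.
    j = np.floor((points[:, 0] - x_range[0]) / res).astype(int)
    i = np.floor((points[:, 1] - y_range[0]) / res).astype(int)
    keep = (i >= 0) & (i < H) & (j >= 0) & (j < W)
    # Shared per-point feature: linear layer + ReLU, so features are >= 0.
    feats = np.maximum(points[keep] @ weight, 0.0)
    C = weight.shape[1]
    grid = np.zeros((H * W, C))  # empty pillars stay at 0
    # Max-pool the features of all points that fall into the same pillar.
    np.maximum.at(grid, i[keep] * W + j[keep], feats)
    return grid.T.reshape(C, H, W)
```

`np.maximum.at` is used instead of plain fancy-index assignment because it accumulates correctly when several points land in the same pillar.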

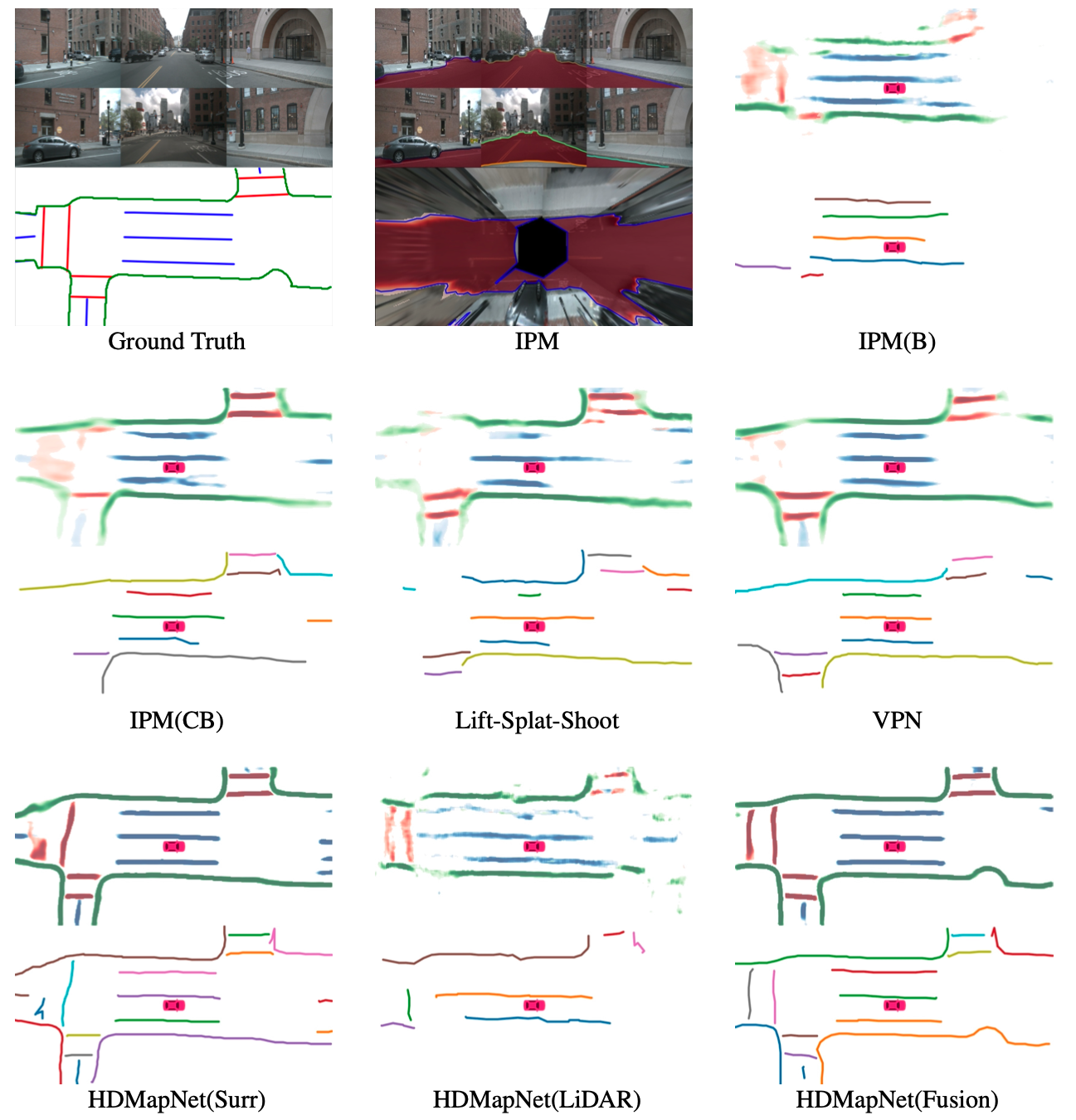

Comparison. Top left: surround-view images and the ground-truth HD map. IPM: lane segmentation results in the perspective view and in the bird's-eye view. Others: semantic segmentation results and vectorized instance detection results. As shown, HDMapNet(Surr) and HDMapNet(Fusion) achieve outstanding performance.
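The IPM baseline maps perspective-view results onto the bird's-eye view. A minimal sketch of inverse perspective mapping is shown below, assuming a flat ground plane at z = 0, known intrinsics `K`, world-to-camera extrinsics `R` and `t`, and nearest-pixel lookup; the function name and grid convention are illustrative assumptions, not taken from the HDMapNet code.

```python
import numpy as np


def ipm(image, K, R, t, x_range, y_range, res):
    """Resample a perspective image onto a BEV grid lying on the ground plane z = 0.

    K: 3x3 intrinsics; R, t: world-to-camera rotation and translation.
    Returns an array of shape (len(ys), len(xs)) + image.shape[2:].
    """
    xs = np.arange(x_range[0], x_range[1], res) + res / 2  # cell centers
    ys = np.arange(y_range[0], y_range[1], res) + res / 2
    gx, gy = np.meshgrid(xs, ys)  # rows follow y, columns follow x
    ground = np.stack([gx.ravel(), gy.ravel(), np.zeros(gx.size)])
    uvw = K @ (R @ ground + t[:, None])  # project ground points into the image
    in_front = uvw[2] > 1e-6
    depth = np.where(in_front, uvw[2], 1.0)  # avoid dividing by zero behind the camera
    u = np.floor(uvw[0] / depth).astype(int)
    v = np.floor(uvw[1] / depth).astype(int)
    h_img, w_img = image.shape[:2]
    valid = in_front & (u >= 0) & (u < w_img) & (v >= 0) & (v < h_img)
    out = np.zeros((gx.size,) + image.shape[2:], dtype=image.dtype)
    out[valid] = image[v[valid], u[valid]]
    return out.reshape(gx.shape + image.shape[2:])
```

Because every pixel is assumed to lie on the flat ground, anything above the road surface or on a slope is projected to the wrong BEV location, which is a known limitation of IPM.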

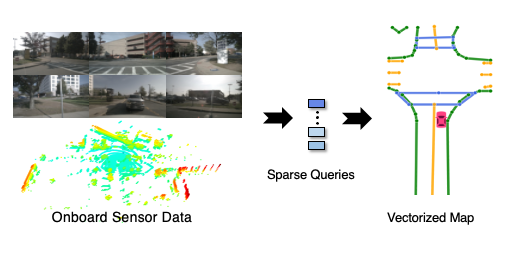

BEV Vectorized Mapping: VectorMapNet

BEV Detection: DETR3D

BEV Fusion: FUTR3D

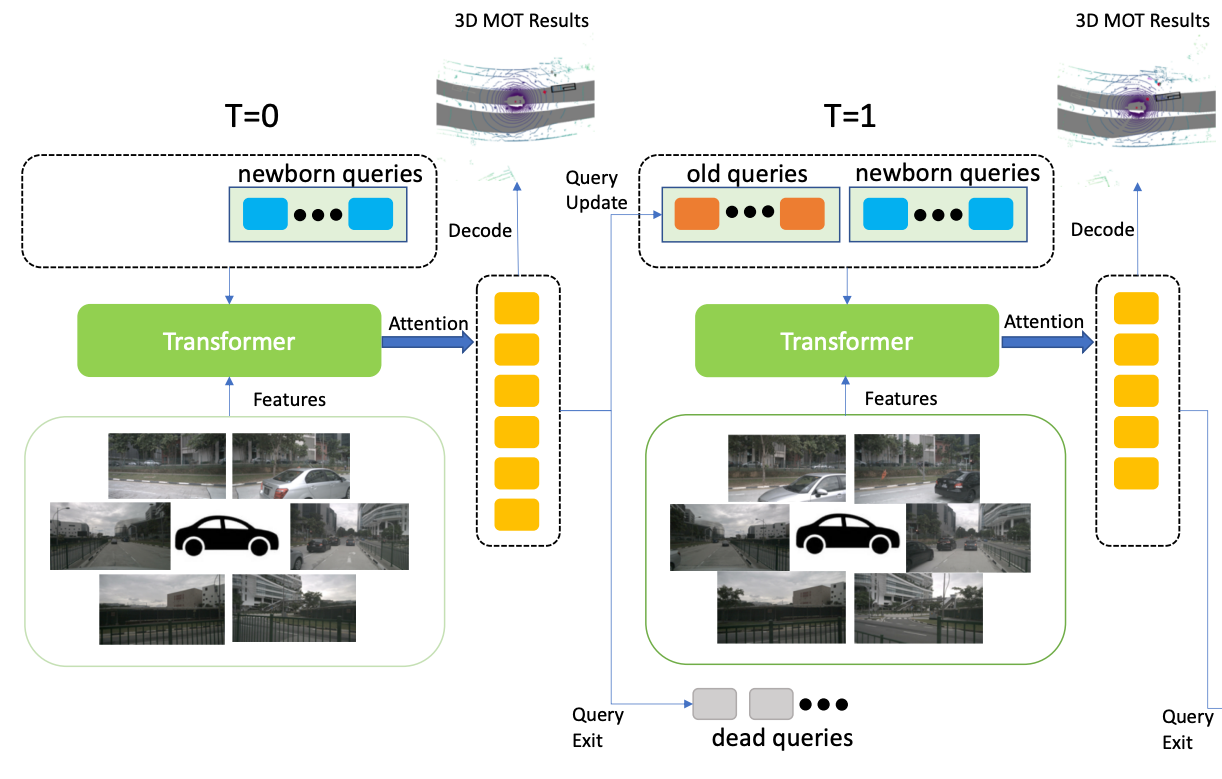

BEV Tracking: MUTR3D

If you find our work useful in your research, please cite our paper:

@article{li2021hdmapnet,
  title={HDMapNet: An Online HD Map Construction and Evaluation Framework},
  author={Qi Li and Yue Wang and Yilun Wang and Hang Zhao},
  journal={arXiv preprint arXiv:2107.06307},
  year={2021}
}